Microsoft launches deepfake detection tech ahead of the 2020 election

- Microsoft on Tuesday announced the launch of Microsoft Video Authenticator, a tool designed to spot when videos have been manipulated using deepfake technology.

- Deepfakes are typically videos that have been altered using AI software, often to replace one person’s face with another, or to change the movement of a person’s mouth to make it look like they said something they didn’t.

- Microsoft said it’s inevitable deepfake technology will adapt to avoid detection, but that in the run-up to the election its tool can be useful.

Microsoft is trying to head off deepfake disinformation ahead of the 2020 election by launching new authenticator tech.

In a blog post on Tuesday, Microsoft announced the launch of a new tool called Microsoft Video Authenticator, which can analyze a still photo or video and provide “a percentage chance, or confidence score, that the media is artificially manipulated.” For videos, the tool can provide this percentage in real time for each frame as the video plays, the company said.

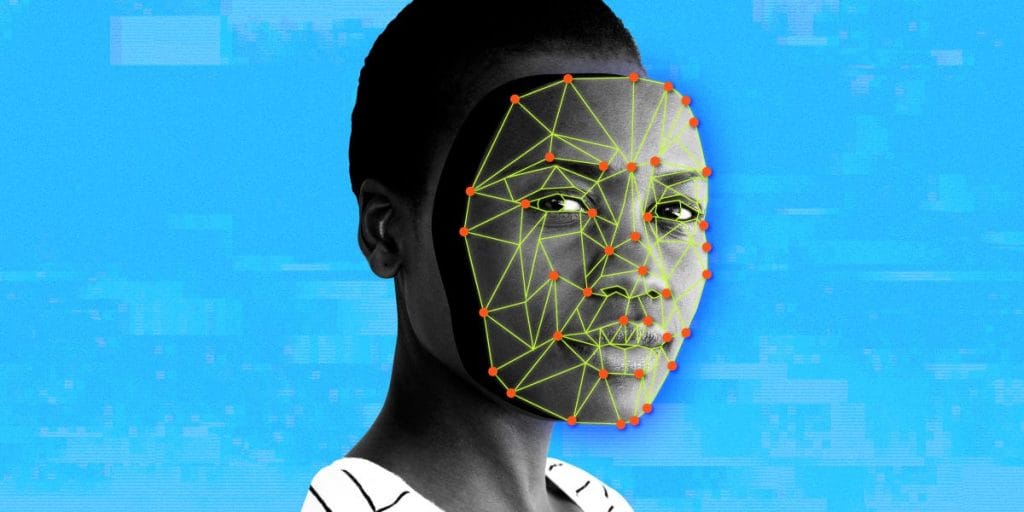

The term deepfake typically refers to videos where AI software is used to manipulate visuals, for example replacing one person’s face with another’s, or changing the movement of a person’s mouth to make it look like they said something they didn’t. Deepfake technology can also alter still images and audio.

Microsoft’s tool works by detecting the blending boundary of a deepfake, along with subtle fading or grayscale elements at those edges that may be invisible to the human eye.

The company also said it was releasing technology to help flag manipulated content. It lets trusted content producers, such as news organizations, attach digital hashes and certificates to their content. These act as a kind of digital fingerprint and travel with the content as metadata. A reader, which can take the form of a browser extension, then checks the certificates and matches the hashes against the content to show whether it has been altered since it was published.
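The hash-and-check idea can be illustrated with a minimal Python sketch. This is not Microsoft’s implementation: the `publish` and `verify` function names and the key are hypothetical, SHA-256 is an assumed hash choice, and an HMAC stands in for the certificate-based signatures a real system would use. The point is only that any change to the content, however small, produces a different hash, so the reader’s check fails.

```python
import hashlib
import hmac

# Hypothetical publisher key. A real system would rely on certificates and
# public-key signatures; HMAC is a simplified stand-in for this sketch.
PUBLISHER_KEY = b"example-publisher-secret"


def publish(content: bytes) -> dict:
    """Producer side: hash the content and attach the hash as metadata."""
    digest = hashlib.sha256(content).hexdigest()
    # The tag shows the hash came from the publisher (stand-in for a certificate).
    tag = hmac.new(PUBLISHER_KEY, digest.encode(), hashlib.sha256).hexdigest()
    return {"sha256": digest, "tag": tag}


def verify(content: bytes, metadata: dict) -> bool:
    """Reader side: check the publisher tag, then recompute and compare the hash."""
    expected_tag = hmac.new(
        PUBLISHER_KEY, metadata["sha256"].encode(), hashlib.sha256
    ).hexdigest()
    digest = hashlib.sha256(content).hexdigest()
    return hmac.compare_digest(expected_tag, metadata["tag"]) and hmac.compare_digest(
        digest, metadata["sha256"]
    )


original = b"frame data from the original news clip"
meta = publish(original)
print(verify(original, meta))         # True
print(verify(original + b"!", meta))  # False: a one-byte change breaks the match
```

A cryptographic hash is used because it is practical to compute but infeasible to forge for altered content, which is what lets a reader flag tampering without comparing the full original file.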

Microsoft has teamed up with a media company coalition called Project Origin, which includes the BBC and the New York Times, for a trial of the new tech. “In the months ahead, we hope to broaden work in this area to even more technology companies, news publishers and social media companies,” Microsoft said.

The company said that while it expects deepfakes to get more sophisticated and harder to detect as time goes on, it’s hopeful that its tech can catch misinformation in the run-up to the 2020 presidential election.

“The fact that they’re generated by AI that can continue to learn makes it inevitable that they will beat conventional detection technology. However, in the short run, such as the upcoming US election, advanced detection technologies can be a useful tool to help discerning users identify deepfakes,” the company wrote.