
Micron Introduces HBMnext, GDDR6X, Confirms RTX 3090

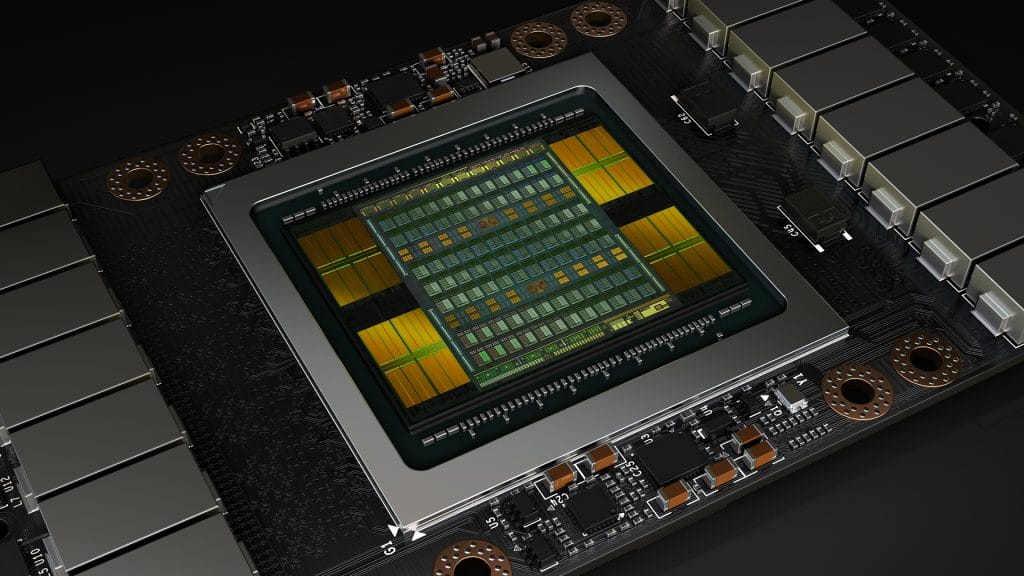

Micron has made a pair of announcements around next-generation memory technologies, HBMnext and GDDR6X. The latter will debut with the upcoming RTX 3090, which will offer 12GB of onboard RAM. There has been talk that Nvidia might bump high-end RAM capacities (and there's no mention of an RTX 3090 Ti here), but for now, this is the clearest indication of what Nvidia may ship in the near future. This information sits somewhere between a leak and an official statement: the updated document cited by VideoCardz is based on an earlier Micron document that is still available.

The data presented here appears to come from an updated version of a Micron report from a few years ago. GDDR6X will offer a slight power improvement over GDDR6, along with higher bandwidth: 912GB/s to 1TB/s per card, compared with an estimated 672-768GB/s for GDDR6. Currently, GDDR6X tops out at 8Gb per chip and up to 21Gb/s per pin, allowing a 12-chip configuration to break the 1TB/s memory bandwidth barrier. In 2021, Micron intends to introduce a 24Gb/s chip with 16Gb density, doubling the amount of VRAM in the same physical footprint.
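The bandwidth figures above fall out of simple arithmetic. The sketch below is a minimal illustration, not Micron's methodology. It assumes each GDDR6/GDDR6X chip has a 32-bit interface, so 12 chips form a 384-bit bus. The 14, 16 and 19Gb/s per-pin rates are our own assumptions, chosen because they reproduce the quoted 672GB/s, 768GB/s and 912GB/s figures. They are not taken from Micron's document.

```python
# Theoretical peak bandwidth and capacity of a GDDR6/GDDR6X configuration.
# Assumption: every chip exposes a 32-bit interface (12 chips -> 384-bit bus).

BITS_PER_CHIP = 32  # assumed per-chip interface width


def peak_bandwidth_gbs(chips: int, gbps_per_pin: float) -> float:
    """Aggregate bandwidth in GB/s: total bus width (bits) x per-pin rate / 8."""
    return chips * BITS_PER_CHIP * gbps_per_pin / 8


def capacity_gb(chips: int, density_gbit: int) -> float:
    """Total capacity in GB from per-chip density in gigabits."""
    return chips * density_gbit / 8


if __name__ == "__main__":
    # 14/16/19Gb/s are assumed rates; 21 and 24Gb/s are the figures Micron quotes.
    for label, rate in [("GDDR6 @ 14Gb/s", 14), ("GDDR6 @ 16Gb/s", 16),
                        ("GDDR6X @ 19Gb/s", 19), ("GDDR6X @ 21Gb/s", 21),
                        ("GDDR6X @ 24Gb/s (2021)", 24)]:
        print(f"{label}: {peak_bandwidth_gbs(12, rate):.0f} GB/s")
    print(f"12 x 8Gb chips: {capacity_gb(12, 8):.0f} GB")
    print(f"12 x 16Gb chips: {capacity_gb(12, 16):.0f} GB")
```

Under these assumptions, 12 chips at 21Gb/s give 1,008GB/s. The 8Gb parts give the RTX 3090's 12GB, and the planned 16Gb parts would give 24GB on the same board layout.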

As for the company's HBMnext, it appears equivalent to SK Hynix's HBM2E, where the "E" stands for "Evolutionary." SK Hynix began production of HBM2E in July 2020, with up to 16GB of RAM and 460GB/s of potential bandwidth per stack. There has been some curiosity about how the power consumption of these memory standards compares. According to Micron, HBM2 and HBM2E retain a power-efficiency advantage over GDDR6 and GDDR6X.

In the past, AMD has been willing to tap HBM for consumer cards. Using a more efficient memory subsystem on the Fury series and on Radeon VII helped improve the performance-per-watt of those GPUs compared with their Nvidia counterparts. More recently, the company has been using GDDR6, and this is expected to continue with RDNA2, though AMD or Nvidia might tap a next-generation flavor of HBM for workstation or enterprise hardware.

Micron has said that it will pursue JEDEC standardization for HBMnext. HBMnext appears to be distinct from HBM3, though its data rate of up to 3.2Gb/s per pin is faster than any shipping HBM2E solution we've seen thus far. SK Hynix's quoted 460GB/s per stack assumes a 3.6Gb/s transfer rate, but we haven't seen any hardware actually run at clocks that fast yet. Micron expects to ship its version of HBM2E/HBMnext by the end of 2022, in 4-Hi/8GB and 8-Hi/16GB densities.
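The per-stack HBM figures follow the same kind of arithmetic. The sketch below is a minimal illustration, not a Micron or SK Hynix formula. It assumes a 1024-bit interface per stack, which is standard for HBM2/HBM2E and which we assume carries over to HBMnext. It also assumes 16Gb (2GB) DRAM dies, which is what the 4-Hi/8GB and 8-Hi/16GB configurations imply.

```python
# Theoretical per-stack HBM bandwidth and capacity.
# Assumptions: 1024-bit interface per stack; 16Gb (2GB) DRAM dies per layer.

HBM_BUS_BITS = 1024  # assumed interface width per stack


def stack_bandwidth_gbs(gbps_per_pin: float) -> float:
    """Per-stack bandwidth in GB/s: interface width (bits) x per-pin rate / 8."""
    return HBM_BUS_BITS * gbps_per_pin / 8


def stack_capacity_gb(dies: int, die_gbit: int = 16) -> float:
    """Per-stack capacity in GB for a stack of `dies` layers."""
    return dies * die_gbit / 8


if __name__ == "__main__":
    # 3.2Gb/s is Micron's HBMnext figure; 3.6Gb/s is SK Hynix's HBM2E figure.
    for rate in (3.2, 3.6):
        print(f"{rate}Gb/s per pin: {stack_bandwidth_gbs(rate):.1f} GB/s per stack")
    for dies in (4, 8):
        print(f"{dies}-Hi stack: {stack_capacity_gb(dies):.0f} GB")
```

Under these assumptions, 3.2Gb/s works out to about 410GB/s per stack and 3.6Gb/s to about 461GB/s. That matches SK Hynix's quoted 460GB/s.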

I'm still holding out hope that the cost of these 2.5D solutions will drop enough to make them practical for mainstream PCs at some point. Outside of Kaby Lake-G, we haven't seen an HBM APU solution. With single stacks offering 410-460GB/s of bandwidth by 2022, there's no reason a single-socket CPU + GPU couldn't offer performance equivalent to a midrange graphics card. Hat-tip to VideoCardz for the report.
